The need for sustainable hardware

Scientists have predicted that by 2040, almost 50% of the world’s electrical power will be used in computing [1]. This projection was made before the sudden explosion of AI-based language models, so the actual share will be even higher if nothing changes in the approach. Simply put, computing will require colossal amounts of power, soon exceeding what our earth can provide. The projected computational performance will also be far outside the capabilities of even the most advanced CMOS-based compute and storage systems.

Take the advance of AI as an example. The total AI compute, expressed in petaFLOP/s-days, has grown by a factor of 1800 in the last two years [2]. Advances in process technology nodes and successive architectural improvements delivered only a factor of six of that growth. The lion’s share came from adding more data centers, and hence from increased energy consumption. From a cost and environmental perspective, this is unsustainable.
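To put these two figures side by side, the short sketch below works out how much of the growth is left to scaling out data centers and what doubling time the overall figure implies. It assumes steady exponential growth and that the hardware factor and the scale-out factor simply multiply; both are simplifications introduced here, not claims from the cited source.

```python
import math


def doubling_time_months(growth_factor: float, period_months: float) -> float:
    """Months per doubling implied by a growth factor over a period.

    Assumes steady exponential growth (an assumption of this sketch).
    """
    return period_months / math.log2(growth_factor)


total_growth = 1800.0    # growth in AI compute over two years (from the text)
hardware_growth = 6.0    # part delivered by node and architecture improvements (from the text)
period_months = 24.0

# Assumption: total growth = hardware growth x scale-out growth.
scale_out_growth = total_growth / hardware_growth

print(f"Growth left to adding data centers: about {scale_out_growth:.0f}x")
print(f"Implied doubling time: {doubling_time_months(total_growth, period_months):.1f} months")
```

Under these assumptions, roughly a factor of 300 has to come from adding capacity rather than from better hardware.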

That is, unless new compute systems can be devised that can deliver significantly higher gains in energy efficiency and computational density. This is what superconducting digital technology brings to the table, by taking advantage of the near-zero electrical resistance of materials at cryogenic temperatures. Initial calculations predict a hundred times greater energy efficiency and a thousand times larger compute density than what state-of-the-art CMOS-based processors can offer [3].

Accelerating the roadmaps for deep learning and quantum computing

Superconducting digital technology is not envisioned to replace conventional technologies, but to complement CMOS in selected application domains, and fuel innovations in new areas.

It will support the growth in AI and ML processing and help provide cloud-based training of big AI models, but in a much more sustainable way. In addition, with this technology, data centers with much smaller footprints can be engineered. And it may also change the paradigm of how compute is done today. It enables energy efficient, compact servers located close to the target application, bridging between edge and cloud. The potential of such transformative server technology makes scientists dream. It opens doors for on-line training of AI models on real data that are part of an actively changing environment, such as in autopilot systems. Today, this is a challenging task, as the required processing capabilities are only available in far-away, power-hungry data centers. Enabling real-time interactions while still being able to talk to the cloud will benefit many application areas, including smart grids, smart cities, mobile healthcare systems, connected manufacturing, and smart farming.

Figure 1 – Illustration showing the potential of superconducting technology for transformative server applications in a smart city context. (Artist impression by imec, partly AI generated.)

Furthermore, elements from superconducting technology might benefit quantum integration in general. Scalable instrumentation needs to be developed to interface with the growing number of qubits: to read them out and control them, and to provide error-correction and data processing capabilities. Because it is compatible in performance scales, operating temperature, energy levels, materials and fabrication processes, superconducting technology can offer a unique solution that is unachievable in other technologies.

System-technology co-development: a key requirement

The promised leap in energy efficiency and computational density will be the result of introducing superconducting materials and new devices, thereby changing the fundamental technology base of classical digital technologies. However, the gains obtained by material and device innovation can easily be undone by bottlenecks at the module or system level. To ensure that these gains propagate effectively across the entire system, innovations across the system stack – from core device components to algorithms – are being co-designed from the outset. This requires a continuous, iterative loop between top-down system-driven choices and bottom-up maturing of technology and integration. Such a system-level approach embraces the fact that solutions are driven by application requirements, and by their implementation constraints and workloads.

For example, one might ask whether the promised energy and cost efficiency of the cryogenic device technology could not be easily undone by the required cooling overhead. To answer that question, researchers at imec have modeled and compared the electricity cost of both a traditional AI system and a cryogenic, superconducting-based AI system, for different computational scales (i.e., number of petaFLOPS). At larger scales, from tens to hundreds of petaFLOPS, cooling overhead starts to reduce significantly, and the superconducting-based system becomes more power efficient than its classical cousin. In other words, the larger the scale of operation, the higher the gains. The cooling can be delivered by commercially available cryocoolers, the size of three traditional server racks.
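The cooling question can be made concrete with the thermodynamic lower bound on cryocooler power. The sketch below is not imec's model; it only computes the ideal (Carnot) work needed per watt of heat removed, and scales it by a hypothetical cooler efficiency. The 4 K operating temperature, the 300 K ambient and the example efficiency fractions are assumptions chosen for illustration.

```python
def carnot_specific_power(t_cold_k: float, t_hot_k: float = 300.0) -> float:
    """Ideal watts of input work per watt of heat removed at t_cold_k."""
    if not 0.0 < t_cold_k < t_hot_k:
        raise ValueError("require 0 < t_cold_k < t_hot_k")
    return (t_hot_k - t_cold_k) / t_cold_k


def wall_power_w(cold_load_w: float, t_cold_k: float,
                 carnot_fraction: float, t_hot_k: float = 300.0) -> float:
    """Room-temperature power for a cooler reaching a fraction of Carnot efficiency."""
    if not 0.0 < carnot_fraction <= 1.0:
        raise ValueError("carnot_fraction must be in (0, 1]")
    return cold_load_w * carnot_specific_power(t_cold_k, t_hot_k) / carnot_fraction


# Assumed operating point: 4 K cold stage, 300 K ambient.
ideal = carnot_specific_power(4.0)
print(f"Carnot limit at 4 K: {ideal:.0f} W of work per W of heat removed")

# Hypothetical efficiency fractions, for illustration only.
for fraction in (0.05, 0.15, 0.30):
    power = wall_power_w(1000.0, 4.0, fraction)
    print(f"1 kW cold load at {fraction:.0%} of Carnot: {power / 1e3:.0f} kW at the wall")
```

The closer a cooler operates to the Carnot limit, the smaller the overhead, which is one way to read the statement that the overhead shrinks at larger scales.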

A data center the size of a shoe box

Researchers at imec used physical design tools to find an optimal functional partitioning of a superconducting-based system for AI processing. A zoom in on one of its boards reveals many similarities with a classical 3D system-on-chip obtained via heterogeneous integration of CMOS-based technologies. The board is similarly populated by computational chips, a CPU with embedded SRAM-like cache memories, DRAM memory stacks and switches – interconnected via Si interposer or bridge technologies.

Figure 2 – Schematic cross section of a board envisioned for superconducting-based AI processing. Superconducting technology building blocks are heterogeneously integrated using advanced 3D and Si interposer technology.

But there are also some striking differences. In classical CMOS-based technology, it is very challenging to stack computational chips on top of each other because of the large amount of power dissipated within the chips. In superconducting technology, the little power that is dissipated can efficiently be handled by the cooling system. Logic chips can be directly stacked using advanced 3D integration technologies resulting in shorter and faster connections between the chips, and area benefits.

Multiple boards can be stacked on top of each other, with only a small spacing between them. A power-performance-area estimate of a 100-board stack reveals a system that can perform 20 AI exaFLOPS (BF16 dense, or 16-bit floating point format dense – equating to 80 exaFLOPS FP8 with sparsity, as often referred to for GPU-based servers), which outperforms the state-of-the-art supercomputers in today’s data centers. What’s more, the system promises to consume only 1 kilowatt (kW) at cold temperature and 500 kW of equivalent room-temperature power, and to have an energy efficiency of more than 100 TOPS (tera operations per second) per watt, all within the footprint of a shoe box.
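As a quick sanity check on these figures, the sketch below divides the quoted throughput by the quoted power. It treats one floating-point operation as one "operation" and measures efficiency against the 500 kW room-temperature figure; both choices are assumptions made here, since the text does not say which combination underlies the quoted efficiency.

```python
EXA = 1e18
TERA = 1e12


def tops_per_watt(ops_per_second: float, power_w: float) -> float:
    """Tera-operations per second delivered per watt of power."""
    return ops_per_second / power_w / TERA


# Figures quoted in the text for the 100-board stack.
cold_power_w = 1e3           # dissipated at cold temperature
room_temp_power_w = 500e3    # equivalent room-temperature power
bf16_dense_flops = 20 * EXA
fp8_sparse_flops = 80 * EXA

print(f"BF16 dense:  {tops_per_watt(bf16_dense_flops, room_temp_power_w):.0f} TOPS/W")
print(f"FP8 sparse:  {tops_per_watt(fp8_sparse_flops, room_temp_power_w):.0f} TOPS/W")
print(f"Room-temperature watts per cold watt: {room_temp_power_w / cold_power_w:.0f}")
```

Under these assumptions, the FP8-with-sparsity figure measured at room-temperature power lands above 100 TOPS per watt, while the BF16 dense figure comes out lower.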

On the technology side: fundamentals, building blocks, and state of the art

To understand why superconducting digital technology is so energy efficient and how it can be used to build digital electronics, we shed light on the physics behind it.

In conventional processors, much of the power consumed and heat dissipated comes from moving information among logic, or between logic and memory elements rather than from actual operations. Interconnects made of superconducting material, on the contrary, do not dissipate any energy when cooled below their critical temperature. The wires have zero electrical resistance, and therefore, only little energy is required to move bits within the processor. This property of having extremely low energy losses holds true even at very high frequencies.

But how can such a technology give us bits of information, in the form of ones and zeros? In classical logic and memory systems, the ones and zeros are derived from the voltage levels of the analog signals. In superconducting elements, the zero resistance rules out a voltage drop. Data encoding is therefore done in a different way. It relies on the Meissner effect, a physical phenomenon involving the exclusion of magnetic fields from a superconducting material when it is cooled below its critical temperature. When a superconducting ring is placed in a magnetic field, current starts to flow inside the ring to expel the field and ensure that the magnetic flux enclosed by the ring is a quantized unit. This magnetic flux quantum can be used as the basis for encoding information. Unlike the voltage ranges used in classical computing, this quantum bit is fundamentally accurate.
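For reference, the quantization condition described here can be written out explicitly. The symbols below (Φ for the flux enclosed by the ring, n for an integer, Φ₀ for the flux quantum) are standard notation introduced for this note, not taken from the article:

```latex
\Phi = n\,\Phi_0, \qquad n \in \mathbb{Z}, \qquad
\Phi_0 = \frac{h}{2e} \approx 2.07 \times 10^{-15}\ \mathrm{Wb}
```

Here h is the Planck constant and e the elementary charge; the factor 2e reflects that the supercurrent is carried by electron pairs.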

Evoking a magnetic flux quantum inside a superconducting ring can serve as a basis for building a superconducting memory element. Once generated, the quantum bit can stay forever. To perform digital operations, we also need an active device, a switch able to change the state of the ring. For that, we can rely on the Josephson effect. This effect is exploited in the so-called Josephson junction, which sandwiches a thin layer of a non-superconducting material between two superconducting layers. Until a critical current is reached, a supercurrent flows across the barrier. When the critical current is exceeded, a tiny voltage pulse develops across the junction, dissipating only 2×10⁻²⁰ J in a picosecond timeframe. This effect can be used to build data encoding schemes, for example, by defining a digital one for a positive pulse when the superconducting phase transitions from low to high, and a digital zero for a negative pulse when it reverts from high to low. During operation, only the ‘tiny’ switching events contribute to the system’s power dissipation.
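The order of magnitude of the switching energy can be checked with a common textbook estimate: the energy dissipated per switching event is roughly the junction's critical current times one flux quantum. The sketch below applies that estimate. The 10 µA critical current and the 4 K operating temperature are hypothetical values chosen for illustration and are not taken from the article.

```python
# Exact SI values of the physical constants.
PLANCK_H = 6.62607015e-34            # J*s
ELEMENTARY_CHARGE = 1.602176634e-19  # C
BOLTZMANN_K = 1.380649e-23           # J/K

# Magnetic flux quantum h / (2e), in webers.
FLUX_QUANTUM = PLANCK_H / (2 * ELEMENTARY_CHARGE)


def switching_energy_j(critical_current_a: float) -> float:
    """Rough energy per switching event: critical current times one flux quantum."""
    return critical_current_a * FLUX_QUANTUM


critical_current_a = 10e-6  # hypothetical junction critical current (assumption)
temperature_k = 4.0         # assumed operating temperature (assumption)

energy_j = switching_energy_j(critical_current_a)
thermal_energy_j = BOLTZMANN_K * temperature_k

print(f"Flux quantum: {FLUX_QUANTUM:.3e} Wb")
print(f"Switching energy at 10 uA: {energy_j:.2e} J")
print(f"Ratio to thermal energy kT at 4 K: {energy_j / thermal_energy_j:.0f}")
```

With these assumed values the estimate lands close to the 2×10⁻²⁰ J quoted above, and well above the thermal energy at the assumed temperature.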

These three physical phenomena – zero resistance, Meissner effect, and Josephson effect – form the basis for building superconducting digital electronics: superconducting logic chips, Josephson SRAMs, zero-resistance interconnects, switches, complemented with tunable metal-insulator-metal capacitors (MIMCAPs) for power delivery, and cryo-DRAM [4, 5]. And although the materials and operation principles are fundamentally different from CMOS-based technologies, the logic operations and data encoding have many similarities. Traditional EDA tools can be used in the design phase, superconducting CPUs can be synthesized from traditional code, and multichip modules (MCMs) can be built. Superconducting electronics does not represent a new computational paradigm. It’s a classical technology, which has been around for a long time. Throughout the years, a variety of high-performing, energy-efficient 8-bit and 16-bit CPUs and MCMs have been reported.

Imec’s superconducting technology scaling roadmap: key enablers and first milestones

The fabrication processes and materials that are used to build today’s superconducting CPUs do not offer the possibility to scale to the compute density required for disrupting the AI and ML roadmaps. Imec has the ambitious goal to scale from today’s 0.25µm lithography node to a 28nm node.

When scaling superconducting wires down to 50nm physical dimension, the product of clock speed and device density becomes comparable to what a 7nm CMOS logic technology node can offer. But in terms of interconnect performance, expressed as Gb/line, the 28nm superconducting technology is envisioned to outperform 7nm CMOS by two to three orders of magnitude, while being about 50 times more power efficient.

Figure 3 – A power-performance-area comparison of 7nm CMOS and 28nm superconducting digital technology for commercial AI/HPC (high-performance compute).

Two key enablers will help reach the scaling target. First, the processing is transferred to imec’s 300mm cleanroom to take advantage of the processes and equipment that have enabled the continued scaling of CMOS technologies. It gives the researchers access to unique capabilities such as 193nm immersion lithography for patterning the structures, and to advanced integration schemes such as semi-damascene for building the interconnect layers. Second, Nb, the superconducting material in use today, is replaced by NbTiN, a superconducting compound with significantly better scaling potential. This material is used to build the interconnects and to fabricate new types of Josephson junctions and MIM capacitors. Unlike Nb, NbTiN can withstand the temperatures used in standard CMOS recipes and reacts much less with its surrounding layers.

Imec focuses on developing modules for the MIM capacitors, Josephson junctions and interconnects, and validating them at cryogenic temperature. Recently, the researchers demonstrated short-loop devices with NbTiN-based metal lines and vias fabricated in imec’s 300mm cleanroom, using a direct metal etch, semi-damascene approach. The fabricated wires are only 50nm wide, are chemically stable, and have a critical current density of 100 mA/µm² and a critical temperature of 14 K – a world first. The ability to scale down to 50nm critical dimension with excellent process control lays a solid foundation for fabricating two-metal-level superconducting interconnects. The feasibility of such a two-metal-level scheme has also been demonstrated. The module is designed to allow multi-level extension, thanks to the planarized individual layers that build up the metal and via strata. This in turn enables multiple metal layers to be stacked on top of each other, resulting in an interconnect fabric. The wire and via results were presented at the 2023 IEEE International Interconnect Technology Conference (IITC) [6].

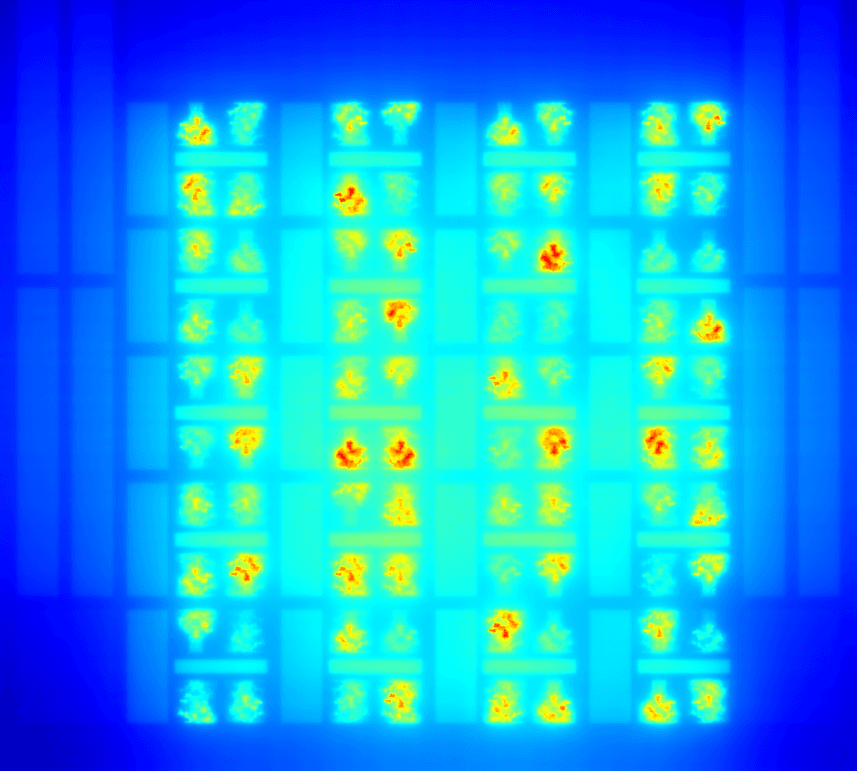

The two-metal-level results provide the groundwork to explore the possibility of embedding innovative superconducting digital logic devices, such as tunable HZO capacitors with NbTiN electrodes and Josephson junctions with αSi barrier and NbTiN electrodes. Progress has been made in the development of superconductor HZO capacitors with NbTiN electrodes using a fab recipe similar to imec’s standard room-temperature HZO capacitors with TiN electrodes. For the αSi-Josephson junctions, the integration of the new NbTiN electrodes resulted in an improvement over previously reported αSi-junctions with Nb electrodes. Using imec’s advanced cleanroom facility allows for good-quality interfaces and sufficient within-device uniformity control that are critical for proper functionality. Cross-sectional examples of the new interconnects, HZO capacitors and Josephson junctions are shown below.

Figure 4a – TEM images of (left) patterned metal-1 (M1) and metal-2 (M2) NbTiN interconnect lines and via; (right) HZO capacitor with NbTiN electrodes.

Figure 4b – Josephson junction with 210nm critical dimension (CD).

Besides scaling down Josephson junctions and interconnect dimensions over three subsequent generations, imec’s roadmap extends to 3D integration and cooling technologies. For the first generation, the roadmap envisions the stacking of about 100 boards to obtain the target performance of 20 exaFLOPS BF16 dense (80 exaFLOPS FP8 with sparsity). Gradually, more and more logic chips will be stacked, and the number of boards will be reduced. This will further increase performance while reducing complexity and cost.

Figure 5 – Imec’s superconducting technology scaling roadmap, spanning three technology generations.

Conclusion

Superconducting digital systems are envisioned to disrupt the AI and ML roadmaps. They promise a major leap in energy efficiency and computational density, which is rooted in the physics behind the superconducting technology. System-technology co-optimization is necessary to ensure that the gains hold for the entire system stack. On the technology side, a roadmap based on scaling down Josephson junctions and interconnects, on increasing clock frequency, and on stacking boards and individual logic chips will allow data servers to be downsized to the size of a shoe box. First milestones have been achieved in scaling down NbTiN-based interconnect wires, HZO capacitors and αSi-Josephson junctions. For all three process modules, using materials and integration schemes compatible with CMOS fabrication is key to success.

This research was also covered in IEEE Spectrum.

Want to know more?

[1] ‘Rebooting the IT Revolution: A Call to Action’, Semiconductor Industry Association (2015), retrieved 14 March 2019

[2] https://openai.com/research/ai-and-compute

[3] ‘Superconducting digital technology: enabling sustainable hardware for deep learning and quantum computing’, Anna Herr, presented at the 2022 imec technology forum (ITF) USA

[4] ‘Scaling NbTiN-based ac-powered Josephson digital to 400M devices/cm2’, A. Herr et al., arXiv preprint arXiv:2303.16792 (2023).

[5] ‘Superconducting pulse conserving logic and Josephson-SRAM’, Q. Herr et al., Appl. Phys. Lett. 122, 182604 (2023)

[6] ‘Towards enabling two metal level semi-damascene interconnects for superconducting digital logic: fabrication, characterization and electrical measurements of superconducting NbxTi(1-x)N’, A. Pokhrel et al., 2023 IITC

Published on:

4 June 2024