Peter Schelkens, group leader of ETRO, an imec research group at Vrije Universiteit Brussel (VUB), and expert in multidimensional signal processing and digital holography

2017 was the year in which artificial intelligence (AI) and new display technologies, such as augmented reality (AR), broke through to the general public. The hype surrounding AI reached a peak, and AI is now part of our day-to-day lives, although we don’t always realize it. Just think of Siri, Apple’s voice-recognition assistant, or the personal recommendations that Google and Netflix serve up to us every day. Many devices that use new display technologies are also already on the market; Microsoft’s HoloLens, for example, has brought AR into our living rooms. Most commercial products based on these technologies, however, are still expensive and have yet to prove that they are more than gadgets. Still, it has been a promising beginning.

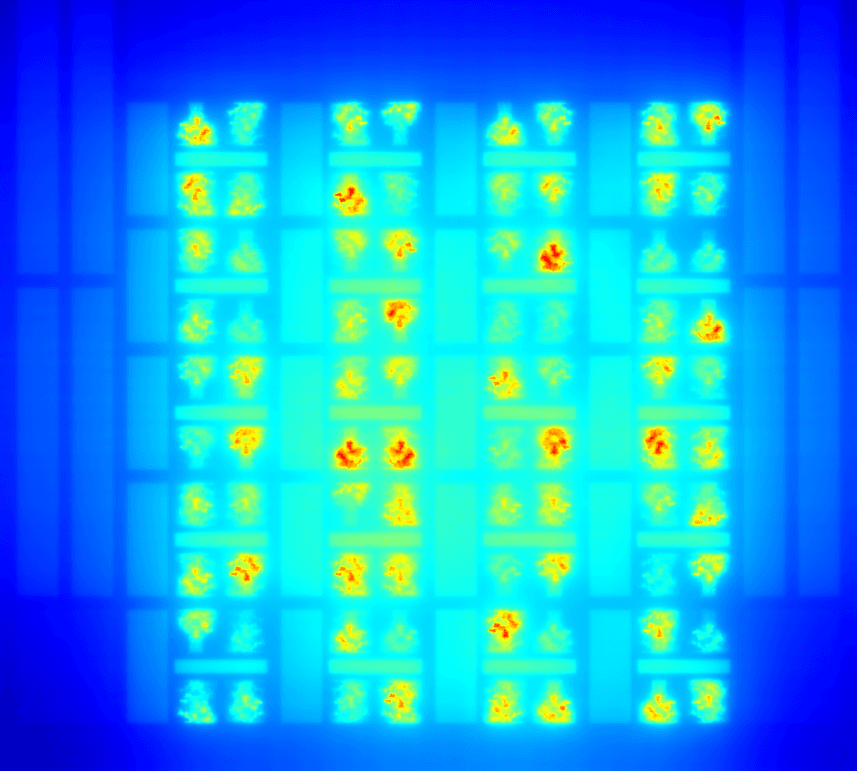

AI and AR are areas in which imec is also active. In the neurosurgery and orthopedic surgery departments at the University Hospital (UZ) Jette, for example, we are investigating the use of AR glasses during surgery, so that surgeons can access additional patient information during procedures. This information is projected directly onto the patient’s body. In the European project TherapyLens, the same technology is being introduced in rehabilitation therapy. Together with Holst Centre, we also recently presented a novel sensing technology that tracks eye movement in real time. Imec’s self-learning neuromorphic chip attracted a great deal of attention at the Imec Technology Forum (ITF), not only for its substantial compute power but also for its very low energy consumption. Finally, the imec Cell Sorter chip combines both fields: holographic image processing for on-chip cell detection, and AI to classify the cells correctly. Imec’s unique strength lies in its access to expertise across a very broad range of application areas.

Liesbet Lagae, Program Director Life Science Technologies at imec, with the Cell Sorter chip. In 2014, she received an ERC grant for this research.

The makeable brain

Of great interest for microelectronics are the recent strides made in neuromorphic computing. Our brains are ideal computers for recognizing patterns and processing information from our senses. Neuromorphic chips take up the challenge of imitating the complexity of this neural network, in the form of a so-called ‘deep neural network’, in an energy-efficient way. The ultimate aim is to emulate the way neurons work, as deep learning software already does, but directly in hardware. Once we can do that, we will be able to integrate this architecture into chips for neuromorphic computing in sensors and for AI applications. Imec is already well on its way with the chip we presented at ITF. This self-learning chip can compose music while consuming very little power. There is a real pioneering role for us to play in this area.
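To make “emulating the way neurons work” a little more concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. It is an illustration under our own assumptions: the function name `simulate_lif` and its parameter values are hypothetical, and the code does not describe the design of imec’s chip. The membrane potential leaks away over time, accumulates incoming current, and only produces an output spike when it crosses a threshold; this sparse, event-driven behaviour is often cited as one reason neuromorphic hardware can be energy-efficient.

```python
def simulate_lif(inputs, tau=20.0, threshold=1.0, v_reset=0.0, dt=1.0):
    """Hypothetical leaky integrate-and-fire neuron (textbook model).

    The membrane potential v follows dv/dt = -v/tau + I(t): it leaks toward 0
    with time constant tau and integrates the input current I. When v reaches
    the threshold, the neuron emits a spike and v is reset.
    Returns the list of time steps at which spikes occurred.
    """
    v = v_reset
    spikes = []
    for t, current in enumerate(inputs):
        # Forward-Euler step of the membrane equation.
        v += dt * (-v / tau + current)
        if v >= threshold:
            spikes.append(t)  # output is an event, not a continuous value
            v = v_reset
    return spikes


if __name__ == "__main__":
    print(simulate_lif([0.2] * 30))    # strong input: regular spiking
    print(simulate_lif([0.04] * 1000)) # weak input: leak wins, no spikes
```

With a constant input of 0.2 the neuron fires every six steps, whereas with 0.04 the potential settles below the threshold (at 0.04 × 20 = 0.8) and the neuron stays silent: it only “computes” when there is something worth signalling.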

This may be a beginning, but there is still a long way to go before we have silicon “brains”. Deep neural networks simulate only part of our brain activity. They are modeled on visual processing, which they treat as a sequential pathway from the eyes to the visual cortex, where the information is processed. In reality, of course, this does not happen sequentially. Only once we understand how the brain works will we be able to make further progress. Moreover, current neuromorphic systems still depend heavily on the algorithms we develop for them. At the moment they lack human creativity, emotion and our ability to reason when making moral decisions. Although progress is slowly being made, in the meantime we need to learn how to cope with the limitations of AI. At the same time, we also need to take a close look at the ethical and social issues involved.

Best of both worlds

With innovations in optics and photonics, the move towards miniaturization in AR is well underway. Thanks to technologies such as freeform optics and optical waveguides it is already possible to produce compact display devices, such as head-mounted displays. More miniaturization is on its way, but real breakthroughs are only possible by integrating hardware and software in the same way as with AI. 2017 was the year in which developments in algorithms and innovations in hardware began to work in each other’s favor.

These once-separate worlds now need to be co-optimized to enable next-generation products. Imec has expertise in both and is therefore ideally placed to make this happen.

Integration is also important for processing the huge quantities of data involved in the new display technologies. A 4K 3D display with 100 viewing angles already has to control about 800 million pixels; for holographic displays, depending on the supported angular field of view, the number is orders of magnitude higher. AI needs to place this information on a display accurately, efficiently and with very low latency. For that to happen, we need progress not only in signal processing but also in the display technology itself. For example, with AI we can try to predict how a person will move in a given virtual environment and where their gaze will turn. We then only need to supply the data for those viewing angles. Light-field technologies, which use a system of lenses to direct light in specific directions and for which the first commercial products are already available, will probably be a stepping stone toward holographic technologies.
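The pixel figure quoted above follows from simple arithmetic, and the same arithmetic shows why predicting the viewer’s position pays off. The Python sketch below assumes that “4K” means 3840 × 2160 pixels per view. The function `views_around` is a hypothetical stand-in for an AI gaze predictor: it simply keeps a fixed window of views around the expected viewing position, whereas a real predictor would be a trained model.

```python
# Assumption: "4K" = UHD resolution, rendered once per viewing angle.
UHD_WIDTH, UHD_HEIGHT = 3840, 2160


def total_pixels(num_views, width=UHD_WIDTH, height=UHD_HEIGHT):
    """Pixels to drive when every view is rendered at full resolution."""
    return num_views * width * height


def views_around(center, radius, num_views):
    """Hypothetical gaze-predictor stand-in: the view indices within `radius`
    of the view the viewer is expected to look from, clipped to the display."""
    lo = max(0, center - radius)
    hi = min(num_views - 1, center + radius)
    return list(range(lo, hi + 1))


def predicted_pixels(views, width=UHD_WIDTH, height=UHD_HEIGHT):
    """Pixels to drive when only the predicted views are rendered."""
    return len(set(views)) * width * height


if __name__ == "__main__":
    full = total_pixels(100)
    subset = predicted_pixels(views_around(center=50, radius=2, num_views=100))
    print(f"all views:       {full:,} pixels")
    print(f"predicted views: {subset:,} pixels")
    print(f"reduction:       {full // subset}x")
```

For 100 views this gives 829,440,000 pixels, the “800 million” in the text; rendering only the five views around the predicted position needs 41,472,000, a 20-fold reduction, provided the prediction is good enough that the viewer never looks from an unrendered angle.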

Translation to industrial application

AI technology may well be promising, but integrating it into industrial applications requires a major reorganization for candidate companies. 80% of the resources will be spent on raising the quality of their data to a level suitable for feeding it into a deep learning network. All internal processes need to be geared toward that aim. Companies will also have to hire a data officer so that they can transform themselves into a data farm in which all the data they generate is properly organized. This means that every company will have to assess whether it can, and wants to, make the required investment, depending on the resulting performance gain and the improved value proposition for its products.

The situation with AR is entirely different. This technology is already mature enough to be used in companies as it is, and it will become important for the manufacturing and maintenance sectors. A good illustration is the imec.icon ARIA project, in which AR is used to carry out maintenance tasks. Imec is working on this with the chemical company Evonik at the Port of Antwerp, where we are developing a solution in which technicians wear AR glasses that project digital information as an extra layer on top of reality. By also using deep neural networks, we have succeeded in recognizing objects in this specialized industrial environment. For example, we can display step-by-step instructions to an engineer carrying out maintenance work on the facilities, while ensuring that the relevant safety procedures are followed. This approach increases efficiency by significantly reducing the training time required, while also providing ongoing support. The ARIA project gives us a sneak peek at what the future holds: the integration of AI and AR may make a real difference in industry. It also shows how important imec’s role is in this revolution: we are uniquely positioned to bring together the various areas of expertise required.

Want to know more?

- Read more about ARIA (Augmented Reality for Industrial Maintenance Procedures) and TherapyLens (Augmented Reality in Rehabilitation)

- Read the press release on the novel sensing technology to track eye movement in real time

- Read the press release on imec’s self-learning neuromorphic chip

Peter Schelkens currently holds a professorship at the Department of Electronics and Informatics (ETRO), an affiliated research group of imec at Vrije Universiteit Brussel (VUB). His research interests lie in the field of multidimensional signal processing, with a strong focus on cross-disciplinary research. In 2014, he received an EU ERC Consolidator Grant for research in the domain of digital holography. Peter Schelkens is a member of the ISO/IEC JTC1/SC29/WG1 (JPEG) standardization committee, where he chairs the JPEG PLENO standardization activity targeted at light field, holography and point cloud technologies. He is an associate editor of IEEE Transactions on Circuits and Systems for Video Technology and of Signal Processing: Image Communication.


Published on: 15 December 2017