CMOS technology has revolutionized the electronics industry by balancing performance, energy efficiency, and affordability. The System-on-a-Chip (SoC) paradigm has allowed a general-purpose approach to drive increasingly complex systems, integrating an ever-larger number of transistors on a single chip. This also enabled high-volume and low-cost production, boosting affordability of electronics, as predicted over half a century ago by the late Gordon Moore.

Moore stated that the number of transistors on a semiconductor chip would double every two years, a trend that would drive the development of increasingly powerful and efficient electronic devices. Simply put, you make things better by making them smaller.

This immense focus on miniaturization and general-purpose design has been central to the huge success of CMOS over the past few decades, but today approaches its physical limits.

CMOS scaling hits multiple roadblocks

While the SoC approach offers maximal energy efficiency, it has pushed system architects to accumulate a large set of complex functions within the CMOS platform. The optimization of multi-core architectures born in the 2000s has led to the rise of a large diversity of compute engines, from the initial CPU to GPU split, to different power optimized processors, and further to different types of accelerators. The memory subsystem within the SoC also diversified extensively over the years, resulting in complex hierarchies and a variety of access mechanisms.

The driver behind this continuous optimization is the need to optimize the compute system to the type of tasks, or workload, it has to execute, each being highly specific to the target application. It is quite remarkable that such an evolution was even possible within a single technological platform, and as it stands, several important obstacles impede its further evolution:

- We are witnessing large advances in chip-to-chip electrical interconnects driven by pitch scaling of micro-bumps and hybrid bonding which allows fine-grained partitioning of SoC functions. Advances in Si-photonics-based optical interconnects and 3D interconnect enabled co-packaging, providing high-bandwidth, low-power optical connectivity at much shorter length scale. This begs the question whether an SoC approach still holds its original energy efficiency advantage. A split into multiple dies could offer large benefits in cost and performance optimization.

- The diversity of applications, requiring advanced technologies to push the boundaries of compute performance, is bringing CMOS to the limit of what it can offer as a general platform. Designers now need to work around the constraints of the single platform, which sometimes leads to large inefficiencies.

- Holistic scaling solutions throughout the CMOS platform are becoming increasingly difficult to attain. For example, 2-nm nanosheet technology will see traditional thick-oxide IO circuits move out of the SoC. The SRAM does not scale as much as logic, and power in the SoC needs to be distributed through a back-side interconnect network as front-side interconnect resistance would become prohibitive.

- The node-to-node performance improvement of CMOS has also dramatically reduced due to transistor RC parasitics increasing faster than the drive strength. This is happening at a time when the design and wafer costs of advanced CMOS have significantly increased due to the complexity of design rules and process integration.

From general purpose to lottery

In this interesting context of changing technology and product needs, creative combinations have led to innovative solutions. Apple M1 Ultra, for example, essentially stitches two chips together through a silicon bridge, creating a hybrid SoC with unprecedented performance and capabilities.1 AMD increases memory capacity by 3D stacking an SRAM die on top of the original Processor SoC.2 In the AI sphere, super scaled-out processing systems such as the full wafer Cerebras’ WSE-2 and Nvidia’s large GPU die H100 combined HBM DRAM are pushing the boundaries of deep learning computing.3,4

The examples above illustrate how tech development is being pulled to extreme corners, depending on the specific needs of a given application space. All while new emerging applications such as augmented and virtual reality, 6G wireless, and autonomous vehicles, will require extreme performance improvements and power reductions. The workload and operating conditions will increase the diversity of implementations that CMOS is expected to support even further, forcing many more suboptimal compromises.

In other words, we are witnessing a failure for CMOS to fulfill its once so powerful role as general-purpose technology. Instead, we will end up in a situation where an application’s success will depend on how well the available CMOS meets its specific boundary conditions. Sara Hooker coined this ‘the hardware lottery’, indicating that the hardware determines which research ideas will succeed or fail.5

Co-optimizing system and technology

When the only tool you have is a hammer, it is tempting to treat every problem like a nail. The only way out of this conundrum is to expand the toolbox. In other words, we need technology platforms that are much more versatile, because the constraints—energy, cost, temperature, power density, memory capacity, speed—for mobile chipsets, for example, are very different from those for HPC or VR systems.

That is why we envision an entirely new paradigm, CMOS 2.0, driven by system technology co-optimization (STCO). STCO involves system designers working closely with technology teams to identify the most appropriate options instead of relying on off-the-shelf scaling options. The technology teams also need to have awareness of specific system specifications while developing the next-generation offerings. Diversity of applications, workloads and system constraints will need a broader palette of technology options.

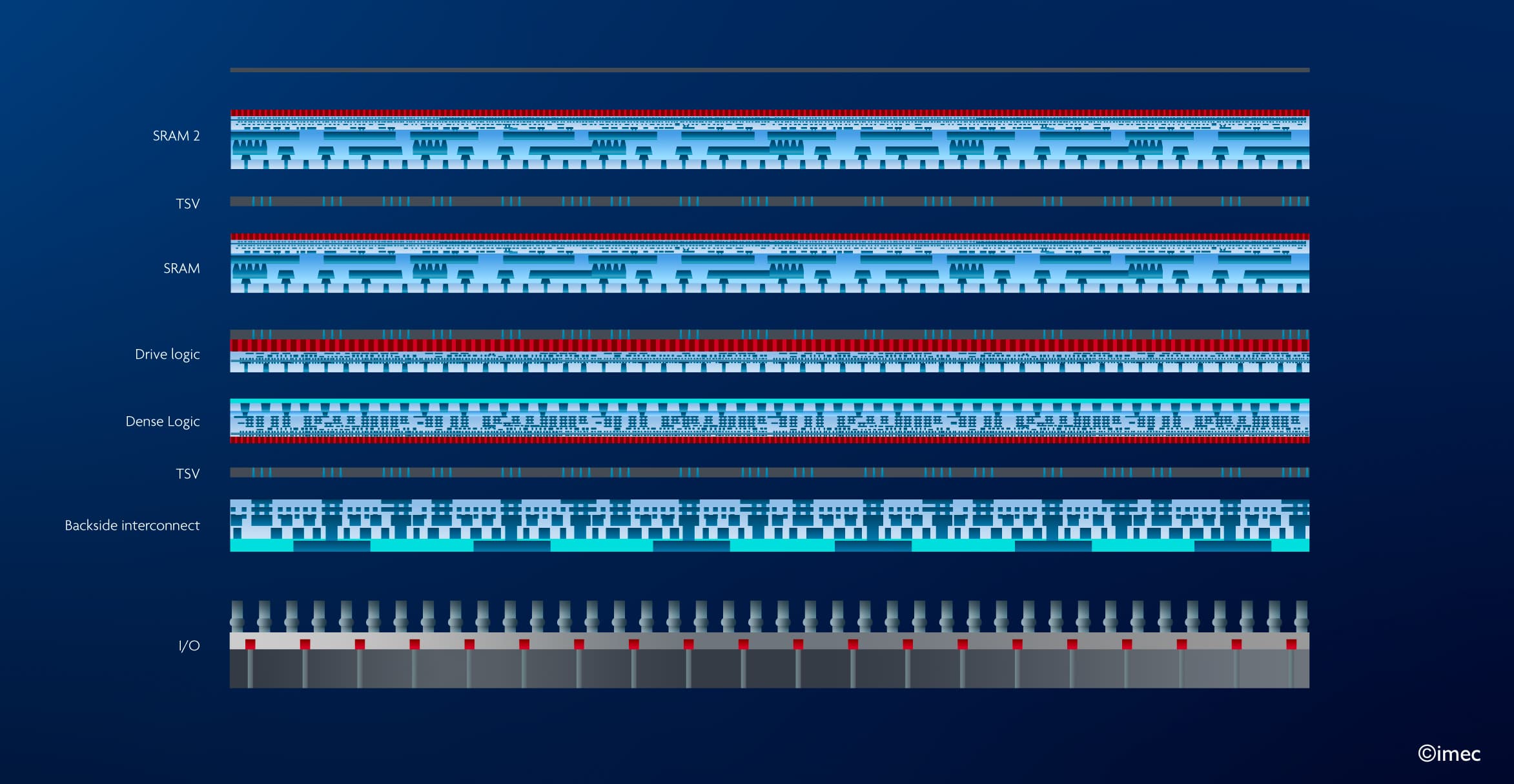

It requires rethinking the technology platform such that it intercepts the needs of a variety of systems and applications. CMOS 2.0 achieves this by enabling tailored chips, built out of a smart partitioning of its various functions in multiple 3D stacked layers (Figure 1).

CMOS2.0 has the same ‘look and feel’ as a classical CMOS platform

Unlike heterogeneous systems that we see today, where hybrid bonding addresses memory limitations, active interposers address bandwidth constraints and backside power distribution networks solve power issues, CMOS 2.0 takes a more revolutionary approach by bringing heterogeneity inside the SoC. It will have the same ‘look and feel’ as classical CMOS platforms, while offering more versatility for system optimization. The Dense logic layer will represent most of the cost and will still require scaling. However, other scaling constraints have now been physically removed to other layers.

The best of both worlds

CMOS 2.0 will leverage existing and new advanced 2.5D and 3D interconnect technologies like dense pitch Cu hybrid bonding, dielectric bonding, chiplet integration, wafer back-side processing as well as sequential 3D integration that involves heterogenous layer transfer. It will allow high interconnect granularity of SoC and the high technology heterogeneity offered by system in a package, essentially unlocking the constraints of conventional CMOS.

CMOS 2.0 will enable the use of low-capacitance, low-drive transistors to drive short-range interconnects while utilizing high-drive transistors in a separate layer to drive long-range interconnects. New embedded memories could be introduced as a separate layer in the cache hierarchy. It will also enable extreme BEOL pitch patterning for scaling without the constraint of voltage drop on power supplies. It will become easier to introduce non-silicon devices like 2D materials, novel embedded memories like MRAM or deposited oxide semiconductors since they are not burdened to meet the general-purpose CMOS specifications. For designers, the CMOS 2.0 platform will feel like conventional CMOS but with a significantly expanded and more versatile toolbox.

While dimensional scaling is no longer the only answer to push computing scaling, CMOS 2.0 will not remove the need for increased density. The scaling problem, however, will have to be tackled in a more holistic fashion, as the answer will be different depending on the application. High-density logic will optimize performance per Watt, while high-drive logic maintains bandwidth and performance in critical path. Devices that do not scale as well, such as dense logic-like thick-oxide IO, power switches, analog or MIMCAPs, can now be integrated in a separate layer using a more cost-effective technology node. Removing all SoC parts that were essential but not scalable also opens the door to a range of new types of devices.

The revolution has already begun

Backside power distribution networks are among the first signs that we are entering a new CMOS 2.0 era. All major foundries have announced they will move into integrated chips with power distribution systems on the wafer backside, which have become increasingly important for achieving high-performance and energy-efficient electronic devices. Access to wafer backside processing opens opportunities to integrate devices like power switches, migrate the global clock routing from front-side or add new system functionality.

Arguably, this paradigm shift offers a more complicated technological reality. How quickly will the EDA tools evolve? Will the cost and complexity of partitioning become prohibitive? Will the thermal properties of CMOS 2.0 platforms be manageable? Only time will tell. To quote the German philosopher and revolutionary Friedrich Engels “no one knows exactly the revolution he is creating.” At the same time, that is exactly what makes these times so fascinating. Navigating these uncharted waters will require close collaboration and co-innovation across the entire semiconductor ecosystem. At stake is not Moore’s Law per se, but the capacity it represents to enable economic growth, scientific progress and sustainable innovation.

This article was originally published in Nature Review Electrical Engineering.

References

1. Kenyon, C. & Capano, C. Apple Silicon Performance in Scientific Computing. 2022 IEEE High Performance Extreme Computing Conference, HPEC 2022 (2022) doi:10.1109/HPEC55821.2022.9926315.

2. AMD 3D V-CacheTM Technology | AMD. https://www.amd.com/en/technologies/3d-v-cache.

3. Lie, S. Cerebras Architecture Deep Dive: First Look Inside the Hardware/Software Co-Design for Deep Learning. IEEE Micro 43, 18–30 (2023).

4. Choquette, J. NVIDIA Hopper H100 GPU: Scaling Performance. IEEE Micro 43, 9–17 (2023).

5. Hooker, S. The Hardware Lottery. CoRR abs/2009.06489, (2020).

Julien Ryckaert received an M.Sc. degree in electrical engineering from the University of Brussels (ULB), Belgium, in 2000 and a Ph.D. degree from the Vrije Universiteit Brussel (VUB) in 2007. He joined imec as a mixed-signal designer in 2000, specializing in RF transceivers, ultra-low power circuit techniques, and analog-to-digital converters. In 2010, he joined the process technology division in charge of design enablement for 3DIC technology. Since 2013, he oversees imec’s design-technology co-optimization (DTCO) platform for advanced CMOS technology nodes. In 2018, he became program director focusing on scaling beyond the 3nm technology node and the 3D scaling extensions of CMOS. Today, he is vice president of logic and in charge of compute scaling.

Sri Samavedam is Senior Vice President of semiconductor technologies at imec since August 2019, oversees programs in logic, memory, photonics, and 3D integration. Previously, he was senior director at GlobalFoundries, leading 14nm FinFET technology into production and developing 7nm CMOS. Starting his career at Motorola, he worked on strained silicon and other advanced materials. He holds a Ph.D. in Materials Science and Engineering from MIT and a master's from Purdue University.

More about these topics:

Published on:

17 January 2024