Sensor data fusion

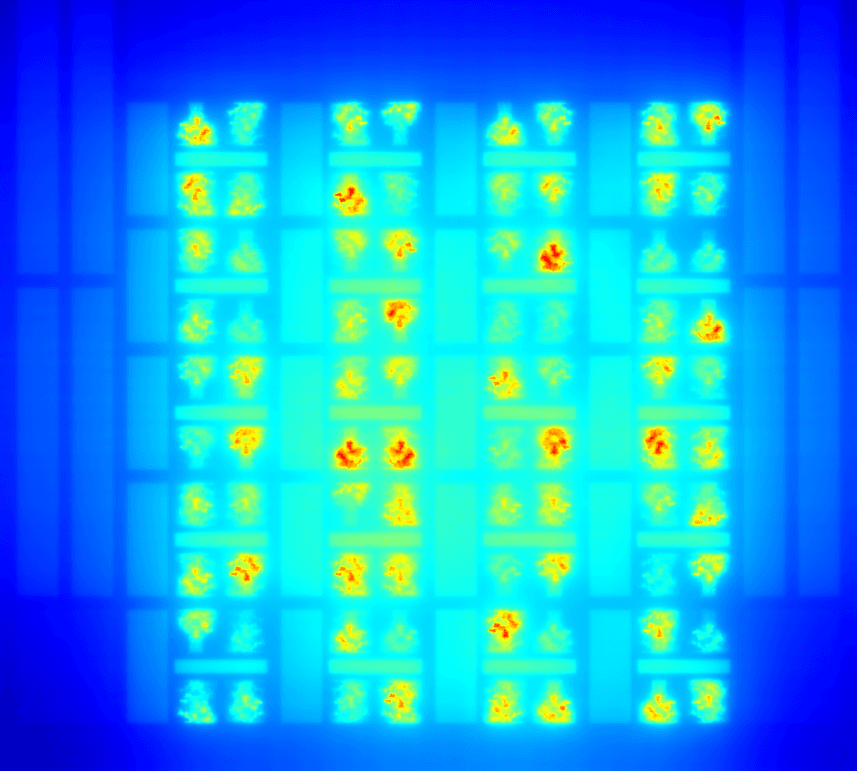

Smart fusion of data from diverse sensors enables safer, faster and more precise automated vision and recognition. Imec’s researchers develop next-generation sensor fusion through AI-based cooperative algorithms and dedicated neuromorphic hardware.

In applications such as autonomous driving, surveillance and robotics, high reliability and security are constant concerns. From that point of view, combining sensor data has several advantages:

- It allows for wider coverage of a scene, e.g. from various angles, at various depths, or in various spectra.

- It provides more robust, redundant information.

- Most importantly, it results in an actionable view under any circumstances, even difficult ones such as night, rain, fog or backlight. This is because different types of sensors have different failure modes.

But here’s the challenge. Conventional early fusion of raw sensor data requires a huge amount of memory and computational bandwidth. Late fusion on preprocessed data, in contrast, risks overlooking meaningful details, or noticing them too late because of its high latency. Either way, this may compromise the reliability and safety of the application.
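To make the trade-off concrete, here is a toy sketch in Python. It is not Imec’s method: the sensor names, the evidence scores, the 0.5 threshold and the noisy-OR combination rule are all assumptions chosen for illustration. It shows how late fusion can drop a detail that both sensors see only weakly, while fusing the scores before deciding keeps it.

```python
def late_fusion(camera, radar, threshold=0.5):
    """Decide per sensor first, then OR the per-sensor decisions."""
    return [c >= threshold or r >= threshold for c, r in zip(camera, radar)]


def early_fusion(camera, radar, threshold=0.5):
    """Combine the scores first (noisy-OR, an assumed rule), then decide once."""
    fused = [1 - (1 - c) * (1 - r) for c, r in zip(camera, radar)]
    return [f >= threshold for f in fused]


# Hypothetical evidence scores in [0, 1] for three cells of a scene.
camera = [0.9, 0.4, 0.1]
radar = [0.2, 0.4, 0.1]

late = late_fusion(camera, radar)
early = early_fusion(camera, radar)

print("late :", late)
print("early:", early)
```

In the second cell neither sensor is confident on its own, so late fusion discards it, whereas the combined evidence crosses the threshold. The price is that every score from every sensor has to be carried into the fusion step, which is where the memory and bandwidth cost of fusing closer to the raw data comes from.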

New sensor fusion strategies

Imec’s researchers are exploring new approaches, tackling the challenge in both software and hardware:

- They develop cooperative fusion using machine learning, to get the most out of a combination of different sensors.

- They develop new neuromorphic hardware, which enables the next leap in precision and energy efficiency.

Are you involved or interested in improving machine sensing and vision? Get in touch to discuss how your application can benefit from the latest developments in these domains.